Deepfake technology has advanced rapidly, making it increasingly difficult to distinguish real content from fake. AI-powered software can now generate hyper-realistic videos and voices, raising concerns about privacy, misinformation, and cybersecurity. In this blog post, we’ll explore how deepfake technology works, the risks it poses, and how to protect yourself in 2025.

What is Deepfake Technology?

Deepfake technology uses artificial intelligence and machine learning to manipulate or synthesize video and audio, creating highly convincing fake content. By training neural networks on large collections of images, video, and audio (often of the specific person being imitated), these systems can replicate a real person’s facial expressions, voice, and mannerisms with alarming accuracy.

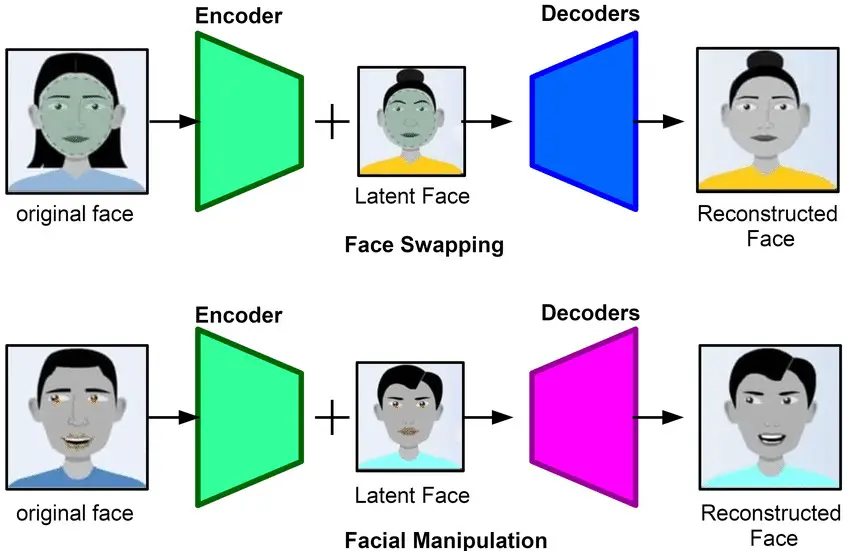

How Deepfakes Are Created

Many deepfake tools use GANs (Generative Adversarial Networks), although autoencoder-based face swaps and diffusion models are also common. In a GAN, two AI models compete:

- Generator: Creates fake content.

- Discriminator: Tries to tell real content apart from the generator’s fakes.

In each round, the discriminator’s verdicts show the generator what gave its fakes away. Over many rounds, the generator improves, making deepfakes harder to identify.
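To make the generator-versus-discriminator loop concrete, here is a minimal toy sketch in Python. It is not how any real deepfake tool is built: it assumes a one-dimensional "dataset" (numbers drawn around 4.0) instead of images, a generator that is just a straight line `G(z) = a*z + b`, and a logistic-regression discriminator `D(x) = sigmoid(w*x + c)`. All function names are hypothetical. Each round, the discriminator takes one gradient step to better separate real from fake samples, then the generator takes one step (using the common "maximize log D(G(z))" objective) to make its samples look more real to that discriminator.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def generate(g, z):
    """Toy generator G(z) = a*z + b: turns random noise z into a fake sample."""
    a, b = g
    return a * z + b


def discriminate(d, x):
    """Toy discriminator D(x) = sigmoid(w*x + c): its estimate that x is real."""
    w, c = d
    return sigmoid(w * x + c)


def d_objective(d, real, fake):
    """Mean of log D(real) + log(1 - D(fake)); the discriminator maximizes this."""
    return sum(math.log(discriminate(d, x)) + math.log(1.0 - discriminate(d, y))
               for x, y in zip(real, fake)) / len(real)


def g_objective(g, d, noise):
    """Mean of log D(G(z)); the generator maximizes this to fool the discriminator."""
    return sum(math.log(discriminate(d, generate(g, z))) for z in noise) / len(noise)


def discriminator_step(d, real, fake, lr=0.05):
    """One gradient-ascent step on d_objective (gradients worked out by hand)."""
    w, c = d
    n = len(real)
    dw = sum((1 - discriminate(d, x)) * x - discriminate(d, y) * y
             for x, y in zip(real, fake)) / n
    dc = sum((1 - discriminate(d, x)) - discriminate(d, y)
             for x, y in zip(real, fake)) / n
    return (w + lr * dw, c + lr * dc)


def generator_step(g, d, noise, lr=0.05):
    """One gradient-ascent step on g_objective, with the discriminator held fixed."""
    a, b = g
    w, _ = d
    n = len(noise)
    # d/dy log D(y) = (1 - D(y)) * w, and y = a*z + b
    da = sum((1 - discriminate(d, generate(g, z))) * w * z for z in noise) / n
    db = sum((1 - discriminate(d, generate(g, z))) * w for z in noise) / n
    return (a + lr * da, b + lr * db)


def train(steps=2000, batch=64, seed=0):
    """Alternate discriminator and generator updates on the toy problem."""
    rng = random.Random(seed)
    g, d = (1.0, 0.0), (0.1, 0.0)
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]  # "real" data
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [generate(g, z) for z in noise]
        d = discriminator_step(d, real, fake)  # discriminator learns to spot fakes
        g = generator_step(g, d, noise)        # generator learns to fool it
    return g, d
```

Calling `train()` returns the final generator and discriminator parameters. The generator’s offset `b` gets pushed toward the real data’s mean of 4.0, but with models this simple the alternating updates can circle around that value rather than settle on it; real deepfake systems use deep networks, image or audio data, and many stabilizing tricks.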

The Growing Threat of Deepfake AI Manipulation

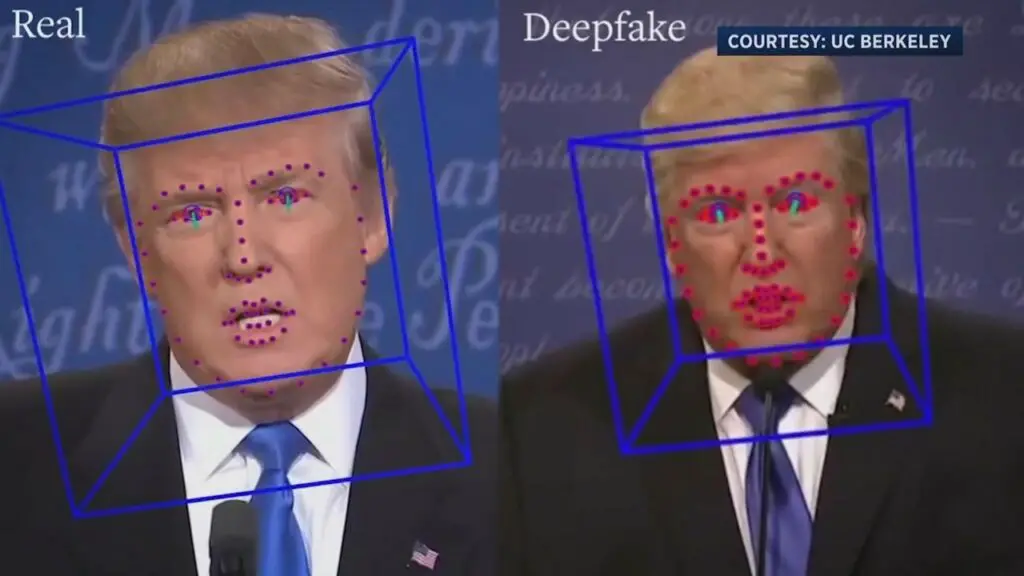

1. Political and Social Manipulation

Deepfakes can spread misinformation by making political figures appear to say or do things they never did, influencing public opinion.

2. Financial & Cybersecurity Risks

- Fraudsters use deepfake audio or video to impersonate CEOs and other executives, for example to request urgent money transfers.

- Synthetic faces and voices can fool some biometric security systems, such as face or voice verification.

3. Privacy & Personal Identity Theft

- Scammers target individuals with fabricated videos or images, often for blackmail.

- AI can mimic real people’s voices in scam calls.

How to Detect & Protect Against Deepfakes

1. Look for Visual & Audio Distortions

- Blurred or flickering edges around the face, unnatural blinking, and lip movements that don’t match the audio.

- Robotic or inconsistent voice tone.
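Unnatural blinking was one of the first cues researchers used, because early deepfake models rarely saw closed eyes in their training photos. A common way to measure blinking is the eye aspect ratio (EAR), computed from six landmark points around each eye; it drops sharply when the eye closes. The Python sketch below assumes you already have per-frame eye landmarks from a face-landmark detector (not shown), and its thresholds (an EAR of 0.2, 8 to 30 blinks per minute) are illustrative assumptions rather than validated values. Newer deepfakes often blink convincingly, so treat this as one weak signal among several.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six eye landmarks p1..p6: p1/p4 are the eye corners,
    p2/p3 sit on the upper lid and p6/p5 on the lower lid."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ears: Sequence[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below threshold."""
    blinks = 0
    run = 0
    for ear in ears:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ended mid-blink
        blinks += 1
    return blinks


def blink_rate_is_unusual(ears: Sequence[float], fps: float,
                          low: float = 8.0, high: float = 30.0) -> bool:
    """Flag a clip whose blinks per minute fall outside an assumed normal range."""
    if not ears:
        raise ValueError("need at least one frame")
    minutes = len(ears) / fps / 60.0
    rate = count_blinks(ears) / minutes
    return rate < low or rate > high
```

For example, a one-minute clip at 30 frames per second in which the EAR never dips below the threshold would be flagged, since zero blinks per minute is well below the assumed range.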

2. Use AI Detection Tools

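- Microsoft Video Authenticator, which estimates how likely it is that a photo or video has been manipulated (initially offered only to select organizations).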

- Deepware Scanner for video analysis.

3. Strengthen Cybersecurity Measures

- Enable two-factor authentication (2FA), so a cloned voice or face alone isn’t enough to get into your accounts.

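- Be cautious of unsolicited video messages or calls, and verify urgent requests through a channel you already trust, such as calling back a known number.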
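Many 2FA setups use an authenticator app that shows a six-digit code which changes every 30 seconds. Those codes typically follow the TOTP standard (RFC 6238): your app and the service share a secret key, and each side derives the current code from that key and the clock. A deepfaked voice or video can’t produce the code, because the attacker doesn’t hold the secret. The Python sketch below uses only the standard library to show the idea; the function names are our own, and for real systems a vetted authentication library is the safer choice.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 of the counter, then dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low 4 bits of the last byte pick a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30,
         digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP where the counter is the number of
    `step`-second intervals since the Unix epoch."""
    if at is None:
        at = time.time()
    return hotp(secret, int(at // step), digits)


def verify(secret: bytes, submitted: str, at: Optional[float] = None,
           window: int = 1) -> bool:
    """Accept codes from the current 30-second interval or `window` intervals
    either side (to tolerate clock drift), comparing in constant time."""
    if at is None:
        at = time.time()
    for drift in range(-window, window + 1):
        if hmac.compare_digest(totp(secret, at + drift * 30), submitted):
            return True
    return False
```

As a check, `totp(b"12345678901234567890", at=59, digits=8)` returns `"94287082"`, the first SHA-1 test vector in RFC 6238. Real authenticator apps usually receive the secret as a Base32 string (for example, inside a QR code), which you would decode with `base64.b32decode` before passing it in.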

For more cybersecurity tips, visit StaySafeOnline.

Conclusion

Deepfake technology is both fascinating and dangerous. While it has legitimate uses in entertainment and research, its potential for misinformation, fraud, and privacy invasion is a growing concern. As AI improves, staying informed and using detection tools are essential for protection.