In the digital age, information spreads faster than ever, but not all of it is true. In 2025, AI-generated fake news has become one of the biggest threats to online trust and to an informed public. With generative AI tools capable of producing highly convincing images, videos, and articles, the fight against disinformation is entering a critical phase. This is where disinformation security comes into play.

What Is Disinformation Security?

Disinformation security refers to the technologies, policies, and strategies designed to detect, prevent, and counteract the spread of false or misleading information, especially content created by AI. This includes:

- AI detection systems

- Digital watermarking

- Fact-checking algorithms

- User education campaigns

These measures are essential for maintaining information integrity in a world where fake news can spread globally within minutes.

How AI Is Generating Fake News

AI has advanced to the point where it can:

- Generate realistic deepfake videos.

- Create entirely fake news articles that sound credible.

- Clone voices for fake audio recordings.

- Fabricate social media accounts to amplify false narratives.

In 2025, malicious actors use these tools to influence public opinion, sway elections, and manipulate markets.

Top Disinformation Security Tools in 2025

To combat the surge in fake content, several technologies are leading the charge:

- Reality Defender: A deepfake detection platform that flags manipulated media.

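- Microsoft’s Video Authenticator: Analyzes photos and videos for subtle deepfake artifacts, such as blending boundaries, and gives a confidence score that the media was manipulated.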

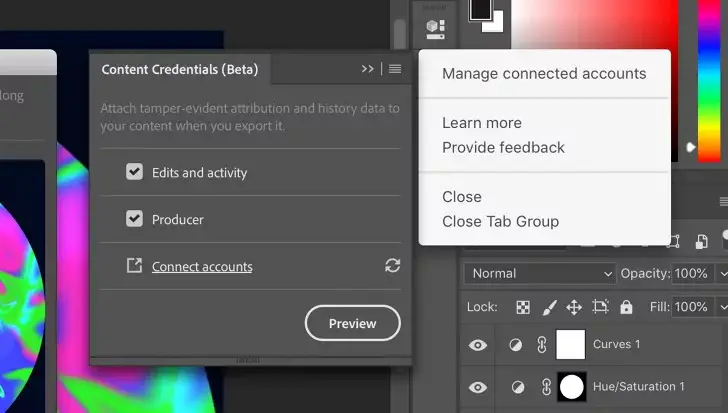

- Content Credentials (led by Adobe): Attaches tamper-evident, cryptographically signed metadata that records where a piece of media came from and how it was edited.

- Hive Moderation: AI moderation API to detect offensive or fake content in real time.

These tools help content platforms, news sites, and users verify the legitimacy of what they’re viewing.
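
The basic idea behind signed provenance metadata such as Content Credentials can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Adobe's implementation or the C2PA manifest format. It assumes a publisher records a SHA-256 fingerprint of the file together with a signature. For brevity it uses a shared HMAC key (the hypothetical `PUBLISHER_KEY`), whereas real provenance systems rely on public-key certificates. The helpers `make_manifest` and `verify` are also hypothetical names.

```python
import hashlib
import hmac

# Hypothetical signing key held by the publisher. This is an assumption for
# illustration: real provenance systems such as C2PA use public-key certificates.
PUBLISHER_KEY = b"example-publisher-secret"


def make_manifest(content: bytes, source: str) -> dict:
    """Record where content came from plus a fingerprint of its exact bytes."""
    digest = hashlib.sha256(content).hexdigest()
    payload = f"{source}:{digest}".encode()
    signature = hmac.new(PUBLISHER_KEY, payload, hashlib.sha256).hexdigest()
    return {"source": source, "sha256": digest, "signature": signature}


def verify(content: bytes, manifest: dict) -> bool:
    """Return True only if the bytes are unchanged and the manifest is untampered."""
    digest = hashlib.sha256(content).hexdigest()
    if digest != manifest["sha256"]:
        return False  # the media bytes were altered after signing
    payload = f"{manifest['source']}:{digest}".encode()
    expected = hmac.new(PUBLISHER_KEY, payload, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, manifest["signature"])


if __name__ == "__main__":
    original = b"...image bytes..."
    manifest = make_manifest(original, "newsroom.example")
    print(verify(original, manifest))            # True
    print(verify(original + b"edit", manifest))  # False
```

Any change to the bytes, even a single pixel, changes the hash, so verification fails. This approach proves only that a file has not changed since it was signed. It says nothing about whether the content is true.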

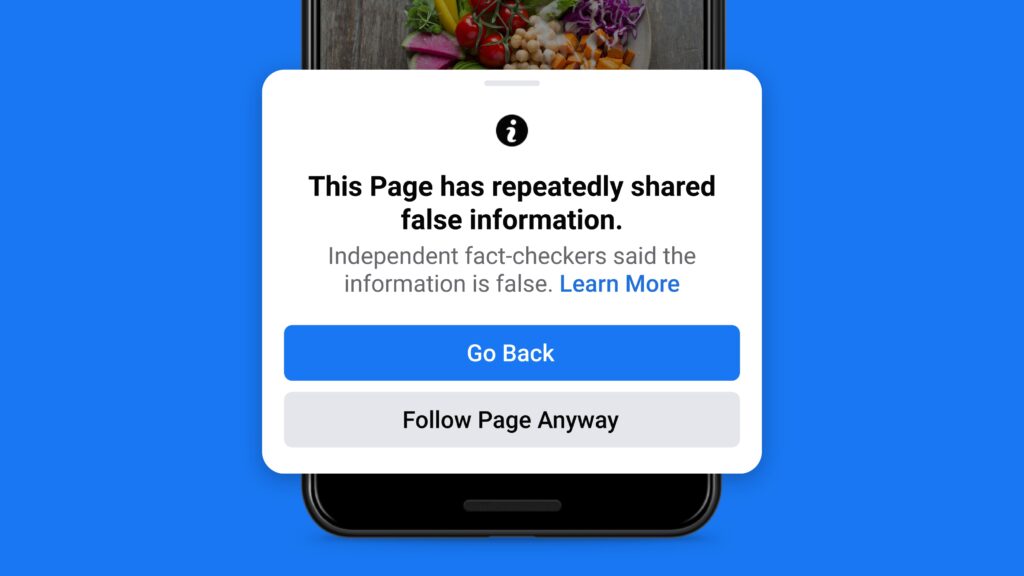

The Role of Social Media Platforms

Major platforms like X (formerly Twitter), Meta, and YouTube are enhancing their disinformation security frameworks. In 2025, they are:

- Using AI to detect bot networks.

- Adding context through fact-checking partners and crowd-sourced notes (such as X’s Community Notes).

- Applying content warnings and context labels.

- Promoting media literacy among users.
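
Platforms do not publish their bot-detection models. One widely discussed signal of coordinated inauthentic behavior, however, is many accounts posting the same text within a short time. The Python sketch below illustrates that signal with a simple rule-based heuristic. It is not an AI model and not any platform's actual system. The `Post` type, the 60-second `window`, and the `min_accounts` threshold are illustrative assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Post:
    account: str
    text: str
    timestamp: float  # seconds since epoch


def flag_coordinated_accounts(
    posts: list[Post], window: float = 60.0, min_accounts: int = 3
) -> set[str]:
    """Flag accounts that post identical (normalized) text within `window`
    seconds of each other, when at least `min_accounts` distinct accounts
    are involved. A crude heuristic, not an AI model."""
    by_text: dict[str, list[Post]] = defaultdict(list)
    for post in posts:
        # Normalize case and whitespace so trivial edits do not hide copies.
        key = " ".join(post.text.lower().split())
        by_text[key].append(post)

    flagged: set[str] = set()
    for group in by_text.values():
        group.sort(key=lambda p: p.timestamp)
        start = 0
        for end in range(len(group)):
            # Advance the left edge until the window spans at most `window` seconds.
            while group[end].timestamp - group[start].timestamp > window:
                start += 1
            accounts = {p.account for p in group[start : end + 1]}
            if len(accounts) >= min_accounts:
                flagged |= accounts
    return flagged


if __name__ == "__main__":
    sample = [
        Post("acct_a", "Polls are closed early!", 0),
        Post("acct_b", "polls are closed  early!", 15),
        Post("acct_c", "Polls are closed early!", 40),
        Post("acct_d", "Nice weather today", 20),
    ]
    print(sorted(flag_coordinated_accounts(sample)))  # ['acct_a', 'acct_b', 'acct_c']
```

In practice, a rule like this would be only one feature among many, because real users also share identical slogans and links.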

Government & Policy Efforts

Governments in many regions are introducing new regulations, such as:

- AI Content Labeling Laws: Require disclosure when content is AI-generated.

- Digital Provenance Standards: Track the origin of digital content.

- Fines for Platform Inaction: Legal penalties for platforms that fail to act against disinformation campaigns.

Public-private collaboration is key to building resilient information systems.

How You Can Protect Yourself

Users also play a critical role in disinformation security. Here’s how to stay safe:

- Double-check sources before sharing.

- Use fact-checking sites like Snopes and PolitiFact.

- Install browser extensions that detect manipulated content.

- Report suspicious content on social media.

Conclusion: Building a Smarter, Safer Internet

As AI evolves, so must our defenses. Disinformation security in 2025 is more important than ever. By combining smart technology, regulation, and user awareness, we can curb the spread of AI-generated fake news and ensure the digital world remains a source of truth, not manipulation.
