Neuromorphic computing is no longer just a research concept: working chips exist, and the field is evolving rapidly. Inspired by the structure and function of the human brain, neuromorphic computing is changing how machines learn, adapt, and process information. In 2025, this brain-inspired technology is paving the way for faster and more energy-efficient computing systems.

What Is Neuromorphic Computing?

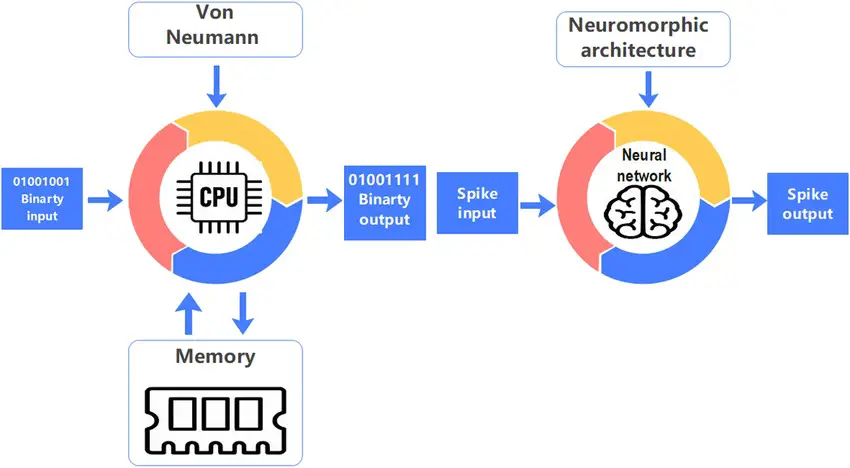

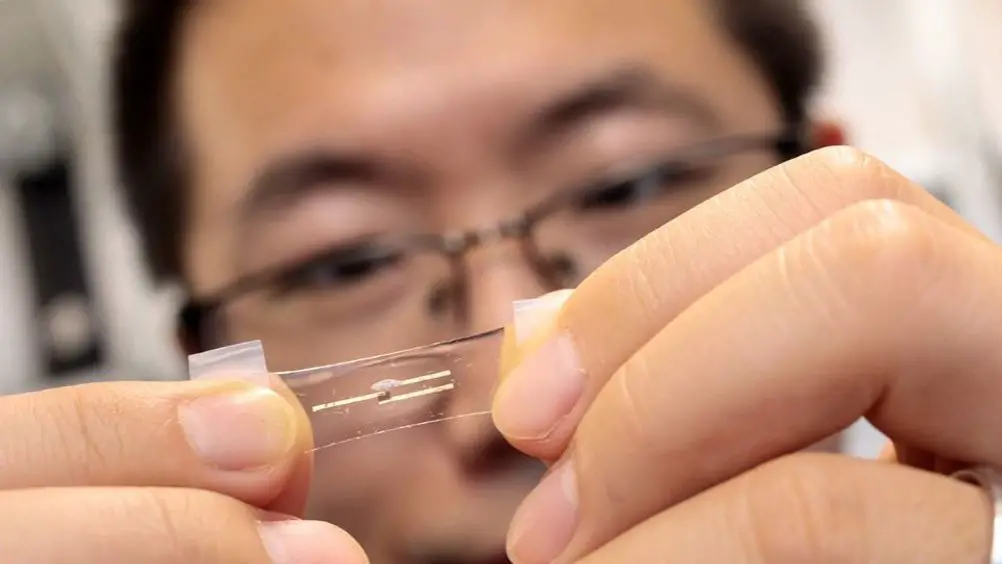

Neuromorphic computing refers to the design of computer systems that mimic the architecture and processing methods of the human brain. Unlike conventional computers, which keep memory separate from processing and execute instructions step by step, neuromorphic systems use networks of artificial neurons and synapses that process information in parallel and communicate through brief signals called spikes, similar to how our brain works.

This design allows neuromorphic computers to perform tasks such as pattern recognition, decision-making, and sensory data processing quickly and with very little energy, especially when the input is sparse and event-based.
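
To make the idea of an artificial neuron concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a simple model commonly used to describe spiking hardware. It is an illustration only, not the implementation of any particular chip; the function name `simulate_lif` and all parameter values (`threshold`, `leak`, `reset`) are assumptions chosen for readability.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns the list of time-step indices at which the neuron spiked.
    All parameter values are illustrative assumptions.
    """
    potential = reset
    spike_times = []
    for t, current in enumerate(input_current):
        # Leak: the stored potential decays. Integrate: the new input is added.
        potential = leak * potential + current
        if potential >= threshold:
            spike_times.append(t)  # the neuron emits a spike (an "event")
            potential = reset      # and starts over from the reset value
    return spike_times


if __name__ == "__main__":
    constant_drive = [0.3] * 10          # hypothetical steady input
    print(simulate_lif(constant_drive))  # prints [3, 7]
```

The neuron stays silent until enough input has accumulated, then fires and resets. Downstream neurons only have work to do when a spike arrives, which is where the energy savings discussed in this article come from.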

Why Mimicking the Human Brain Matters

Mimicking the human brain through neuromorphic computing offers several advantages:

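- Low Power Consumption: Because neurons do work only when spikes arrive, these systems can consume far less power than conventional CPUs or GPUs on sparse, event-driven workloads.

- Speed and Efficiency: Many neurons operate in parallel and keep their memory close to the computation, which cuts the time spent moving data around.

- Adaptive Learning: Neuromorphic chips that support on-chip learning can adapt to their environment, making them ideal for real-time applications like robotics and edge AI.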
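
The power and speed advantages listed above largely come down to doing less work when little is happening. The sketch below compares the number of operations a conventional dense layer performs with the number an event-driven layer performs when only a few inputs spike. Operation counts are only a rough proxy for energy, not a power measurement, and the function names and the 5% activity level are assumptions made for this illustration.

```python
def dense_ops(num_inputs, num_outputs):
    """Multiply-accumulates for one dense layer step: every input reaches every output."""
    return num_inputs * num_outputs


def event_driven_ops(spikes, num_outputs):
    """Synaptic updates when only the inputs that spiked (value 1) trigger any work."""
    return sum(spikes) * num_outputs


if __name__ == "__main__":
    num_inputs, num_outputs = 1000, 100
    # Hypothetical activity: 1 in every 20 inputs spikes during this time step.
    spikes = [1 if i % 20 == 0 else 0 for i in range(num_inputs)]

    dense = dense_ops(num_inputs, num_outputs)
    sparse = event_driven_ops(spikes, num_outputs)
    print(dense, sparse, sparse / dense)  # prints 100000 5000 0.05
```

In this toy setup the event-driven layer performs 5% of the operations of the dense one. Real savings depend on how sparse the activity is and on hardware details that this count ignores.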

Neuromorphic Computing vs Traditional AI Architectures

While many of today's AI workloads run in cloud-based, power-hungry data centers, neuromorphic computing aims to bring AI to the edge. This shift reduces latency and enhances privacy, as processing happens locally. The ability of neuromorphic systems to operate without constant cloud connectivity gives them a unique edge in mobile, wearable, and IoT applications.

Top Neuromorphic Projects Leading in 2025

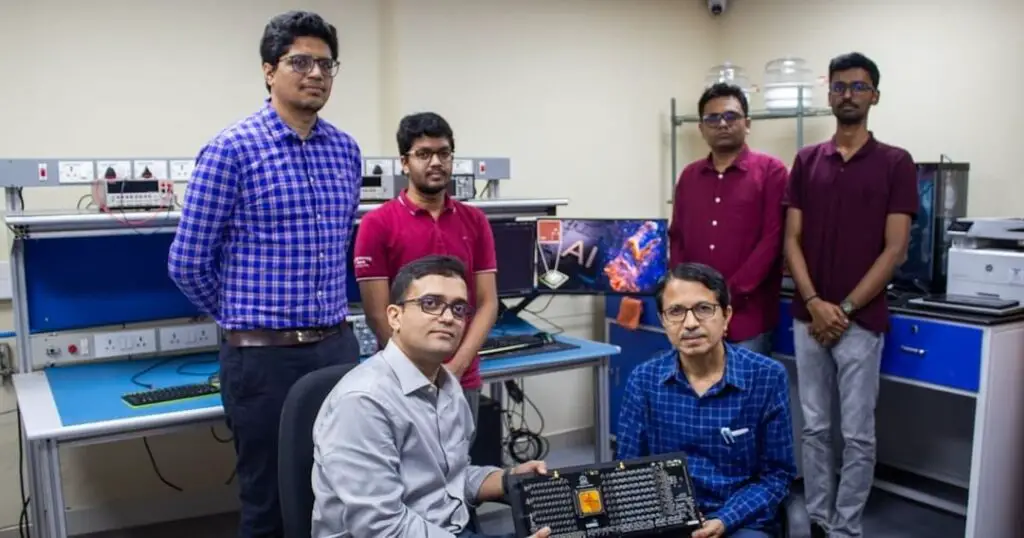

Several groundbreaking projects and companies are spearheading the development of neuromorphic computing:

- Intel’s Loihi 2: A second-generation neuromorphic research chip, introduced in 2021, that supports on-chip learning and is designed for high energy efficiency.

- IBM’s TrueNorth: A brain-inspired chip, first unveiled in 2014, with 1 million neurons and 256 million synapses.

- SynSense: A startup focused on neuromorphic vision sensors and AI edge computing.

These innovations showcase the real-world potential of mimicking the human brain in silicon.
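
The TrueNorth figures quoted above can be checked with a little arithmetic. The per-core numbers in this sketch (4,096 cores, each with 256 neurons and a 256 x 256 synapse crossbar) come from IBM's published description of the chip rather than from this article, and the function name `truenorth_totals` is made up for the example.

```python
def truenorth_totals(cores=4096, neurons_per_core=256, axons_per_core=256):
    """Return (total_neurons, total_synapses) for a tiled neurosynaptic chip.

    Each core is modeled as a crossbar in which every input axon can connect
    to every neuron on that core. Default values follow IBM's published
    TrueNorth description and are not taken from this article.
    """
    total_neurons = cores * neurons_per_core
    total_synapses = cores * axons_per_core * neurons_per_core
    return total_neurons, total_synapses


if __name__ == "__main__":
    neurons, synapses = truenorth_totals()
    print(f"{neurons:,} neurons, {synapses:,} synapses")
    # prints 1,048,576 neurons, 268,435,456 synapses
```

The rounded "1 million" and "256 million" figures are therefore 2^20 neurons and 256 x 2^20 synapses.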

Applications of Neuromorphic Computing in 2025

Neuromorphic computing is being explored and piloted across industries:

- Healthcare: For rapid diagnostics and neural prosthetics.

- Autonomous Vehicles: Enhancing real-time decision-making and perception.

- Smart Devices: Enabling always-on voice assistants and low-latency AI.

- Security: For biometric recognition and surveillance analysis.

Challenges in Scaling Neuromorphic Systems

Despite its potential, neuromorphic computing faces challenges:

- Hardware Complexity: Building brain-like chips is intricate and costly.

- Software Compatibility: Traditional software must be re-engineered to work with neuromorphic architectures.

- Standardization: The industry lacks common benchmarks and protocols.

Still, the progress made in 2025 is promising, with more investment pouring into neuromorphic research and development.

Conclusion: The Future of Neuromorphic Computing

Neuromorphic computing is bringing brain-inspired hardware closer to practical use than ever before. In 2025, it is poised to change how we approach AI, robotics, and computing as a whole. As the technology matures, we can expect smarter, more adaptive machines that learn more like humans do, without burning through energy or time.
